About Lea Vega's graduation

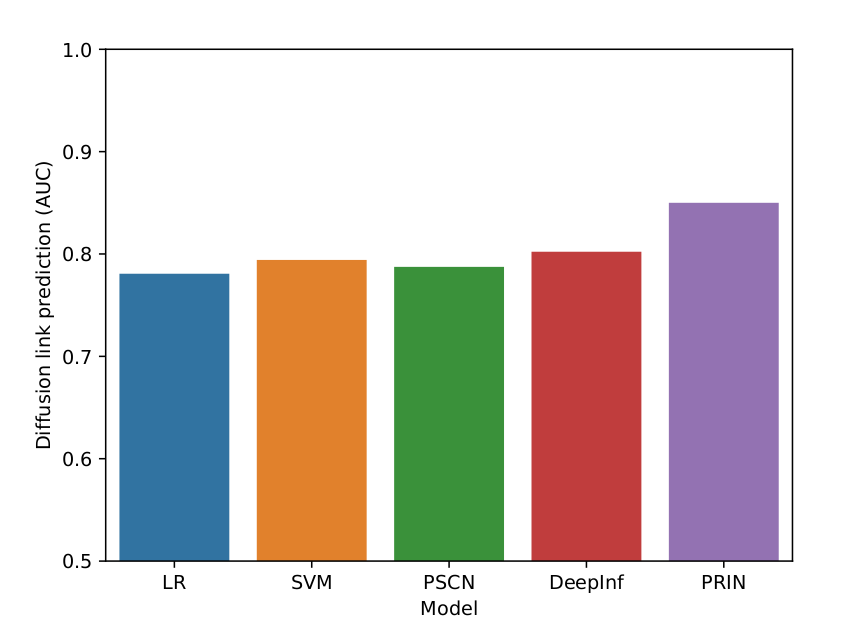

Five years ago, I was surprised when Lea Vega came to my office to ask me to be her advisor. After all, I am not exactly a nice person… actually, I am always asking more of my students. But Lea did not need any “encouragement” (Do you see my terrible smile?). For example, once Dr. Paul Gader came to visit the center and was quite impressed by her work. Now, after all this time and her work with collapsed Gibbs samplers, neo4j, and a lot of data collection, she was able to finish and got good results:

Actually, it was nice to see that a deep analysis of the problem can outperform the simple application of massive data.

So, yesterday, she was finally able to present her graduation exam online, the first one at Cinvestav, making her a newly minted PhD. She already has a nice job waiting for her, and that is good given the situation. I am quite happy, and now new things arise from this. Two pieces of good news are:

- We are planning to post her code, so anybody can repeat her experiments.

- In a previous post, thoughts about the sufficiency principle gave me an idea about creating something called sufficiency on-line:

  - How regret can be used in your estimator as you collect data, by extending the idea from optimization to sufficiency.